The internet is filled with content about software testing, and there is an overwhelming set of test types, each tied to a different scope of testing. While developers tend to focus on the scopes closest to the code itself, the real power comes from the scopes closest to the users.

For the purposes of this discussion, let’s define what some of these terms mean. We will also exclude non-functional testing, such as performance and resiliency testing, and stick to functional tests.

- Unit Tests – These are very small tests focused on a single method/function within an application. They should run quickly and answer one narrow question: “does the code work?” If your unit test starts using functionality from more than one class, that is a sign it is too big.

- Functional Tests – These are small tests focused on a small set of classes that, when combined, create some testable functionality. Functional tests do not aim to satisfy a user story in its entirety; instead, they cover a small piece of functionality that is complex and bigger than what a unit test can cover. Functional tests should not have any external dependencies, such as a database connection or a messaging bus.

- Integration Tests – I think of these as functional tests plus integration with external dependencies, such as databases or messaging. This scope covers questions like “do the SQL statements return the values I expect?” and “is the message I published to a topic what I expected?” These tests require connectivity to dependencies, either locally on the developer workstation or remotely in a development environment.

- Acceptance Tests – These tests directly reflect actual, fully implemented features. They encompass the entire application stack, from the external interface down.

When passing, acceptance tests should truly answer a single question: does the application work correctly?
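To make the unit and integration scopes concrete, here is a minimal Python sketch. All names are hypothetical: `apply_discount` stands in for a single function worth unit testing, and `OrderRepository` stands in for a data-access class whose SQL an integration test verifies. An in-memory SQLite database is used only so the example runs anywhere; a real integration test would ideally target the same database engine used in production.

```python
import sqlite3
import unittest


def apply_discount(total: float, percent: float) -> float:
    """Hypothetical business rule: a single function, ideal for a unit test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(total * (1 - percent / 100), 2)


class OrderRepository:
    """Hypothetical data-access class; its SQL is what an integration test checks."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn

    def total_for_customer(self, customer_id: int) -> float:
        row = self.conn.execute(
            "SELECT COALESCE(SUM(amount), 0) FROM orders WHERE customer_id = ?",
            (customer_id,),
        ).fetchone()
        return row[0]


class UnitScope(unittest.TestCase):
    # Unit scope: one function, no collaborators, no I/O.
    def test_discount_is_applied(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


class IntegrationScope(unittest.TestCase):
    # Integration scope: "do the SQL statements return the values I expect?"
    def setUp(self):
        self.conn = sqlite3.connect(":memory:")  # stand-in for a real database
        self.conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
        self.conn.executemany(
            "INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (1, 15.5), (2, 99.0)]
        )

    def tearDown(self):
        self.conn.close()

    def test_total_only_includes_that_customers_orders(self):
        self.assertEqual(OrderRepository(self.conn).total_for_customer(1), 25.5)

    def test_customer_without_orders_totals_zero(self):
        self.assertEqual(OrderRepository(self.conn).total_for_customer(3), 0)
```

Running the file with `python -m unittest` executes both suites. The unit tests need no setup at all, while the integration tests need a schema and seed data, which is exactly the kind of dependency the integration scope introduces.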

Why Acceptance?

According to the dictionary, acceptance as a noun has two meanings:

- the action of consenting to receive or undertake something offered.

- the action or process of being received as adequate or suitable.

When releasing software, there is always an implied contract between IT and the business. Typically, the IT organization delivers something of value (new features, a bugfix, a hotfix, etc.), and it’s ultimately the requesting party’s responsibility to accept the change. At most of my customers, business acceptance is a three-step “trust, but verify” procedure: the development team tests the changes, then a separate QA team independently tests them, and finally the software is released to a UAT (User Acceptance Testing) environment where business users verify the functionality before it is released to production.

This pattern creates two pieces of organizational dysfunction. First, it creates silos of responsibility, which can result in “passing the buck”. If something breaks, the development team can blame the QA team for not testing well enough, and the QA team can blame the users for the same. This culminates in the ultimate issue: the users lose trust in the QA and development teams. The UAT cycles get longer and more frustrating, and deliveries slow down.

The second piece of organizational dysfunction is duplication of work. The first part of the duplication is that the development team, the QA team, and the users end up writing or maintaining large, overlapping sets of tests. They test the same things in different ways. The developers write code-based tests focused on the application code. The QA team typically has code-based tools as well as a set of manually executed user scripts. Meanwhile, the users rely solely on user scripts because they lack the technical skill sets of the developers and QA teams. The second part of the duplication occurs when a bug found late in the testing process requires a code change. That change effectively nullifies all previous test results and forces the organization to start over from scratch.

The question everyone should be asking is whether there is a better way to achieve the value of UAT in a single, repeatable process. Can’t the users come together with IT to help design and deliver a codified set of repeatable tests that provides the same level of confidence as the existing process, without the dysfunction?

The answer: it depends.

Layers of Testing

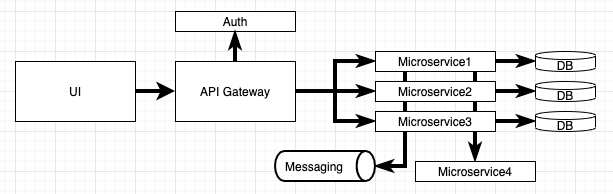

The first problem to identify is areas of responsibility. Let’s look at a very simple architecture.

Users would test this system through the UI. However, that can leave significant gaps in test execution; for example, an event-driven process may not have a UI trigger at all. Regardless, very few companies are able to drive all of their testing through the UI. Additionally, if the Microservice1 team is a Java-based API team, expecting them to write Selenium tests to cover their API is simply not feasible, both because of the expertise required and because of the way features are rolled out. The tests need to be layered and combined for completeness, which allows teams to deliver value independently and quickly.

Inverting the Triangle

Developers tend to start at the bottom (unit tests) and work their way up (unit->functional->integration->acceptance). As they move up, the number of tests decreases. They should actually turn that pyramid upside down. I want as many acceptance tests as possible, and I want them to cover as much of the codebase as possible. I should only write smaller tests, such as functional or unit tests, for very complex classes or scenarios where they help me validate the functionality quickly.

Application developers can become too narrowly focused on the code in a single class. While it’s a no-brainer that the actual code logic needs to work, that is only a small piece of the pie. From the bottom up, testing is about layers of validation, but effort is sometimes concentrated too heavily on unit and integration testing. If developers are going to spend effort creating tests, we want that effort to kill as many birds with one stone as possible. Why write four levels of testing when one level will suffice?

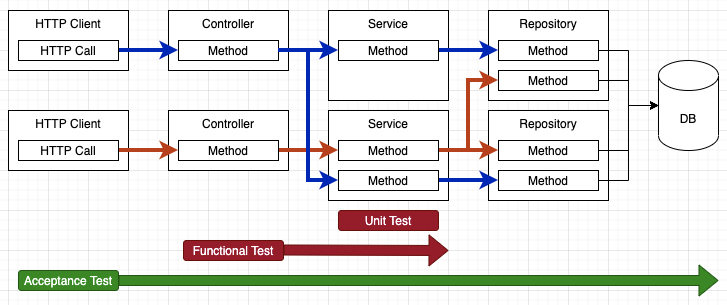

Too many times, a developer will write unit tests at the controller and service levels, mocking out the service and the repository, respectively, to isolate the functionality in that particular class’s method. Sure, it works, and yes, it provides value, but for a busy developer with a pressing backlog managed by a feature-hungry business, I want a bigger bang for my effort.

To illustrate the point, let’s look at a traditional web-service-based architecture.

If I’m a developer and I can write a single test that provides code coverage across the Controller, Service, Repository and Database, why would I want to produce and maintain what might be 5-10 unit tests along with all of the required mocking infrastructure? Additionally, if there is code that cannot be covered with these types of tests, is the code even necessary?
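Here is a minimal, hypothetical sketch of that single test in Python, using only the standard library. `CustomerRepository`, `CustomerService`, and the controller are invented names that mirror the Controller/Service/Repository layering; an in-memory SQLite database stands in for the real database, and `http.server` stands in for a real web framework. One black-box test class exercises all four layers through HTTP, with no mocks.

```python
import json
import sqlite3
import threading
import unittest
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class CustomerRepository:
    """Repository layer (hypothetical): owns the SQL."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.lock = threading.Lock()  # requests arrive on different threads

    def find_name(self, customer_id: int):
        with self.lock:
            row = self.conn.execute(
                "SELECT name FROM customers WHERE id = ?", (customer_id,)
            ).fetchone()
        return row[0] if row else None


class CustomerService:
    """Service layer (hypothetical): business rules live here."""

    def __init__(self, repo: CustomerRepository):
        self.repo = repo

    def greeting(self, customer_id: int):
        name = self.repo.find_name(customer_id)
        return None if name is None else f"Hello, {name}!"


def make_controller(service: CustomerService):
    """Controller layer (hypothetical): maps HTTP requests to service calls."""

    class Controller(BaseHTTPRequestHandler):
        def do_GET(self):
            parts = self.path.strip("/").split("/")
            if len(parts) == 2 and parts[0] == "customers" and parts[1].isdigit():
                message = service.greeting(int(parts[1]))
                if message is not None:
                    self._send(200, {"message": message})
                    return
            self._send(404, {"error": "not found"})

        def _send(self, status, body):
            payload = json.dumps(body).encode()
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

        def log_message(self, *args):
            pass  # keep test output quiet

    return Controller


def start_app():
    """Wire the real layers together against a real (in-memory) database."""
    conn = sqlite3.connect(":memory:", check_same_thread=False)
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO customers VALUES (1, 'Ada')")
    service = CustomerService(CustomerRepository(conn))
    server = ThreadingHTTPServer(("127.0.0.1", 0), make_controller(service))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


class CustomerApiAcceptanceTest(unittest.TestCase):
    """Black-box tests: they only know the HTTP contract, not the classes."""

    @classmethod
    def setUpClass(cls):
        cls.server = start_app()
        host, port = cls.server.server_address[:2]
        cls.base = f"http://{host}:{port}"

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def test_existing_customer_is_greeted(self):
        with urllib.request.urlopen(f"{self.base}/customers/1") as resp:
            self.assertEqual(resp.status, 200)
            self.assertEqual(json.load(resp), {"message": "Hello, Ada!"})

    def test_unknown_customer_returns_404(self):
        with self.assertRaises(urllib.error.HTTPError) as ctx:
            urllib.request.urlopen(f"{self.base}/customers/42")
        self.assertEqual(ctx.exception.code, 404)
        ctx.exception.close()
```

Because the tests only know the HTTP contract, refactoring the service or repository internals does not require touching them, whereas mock-based unit tests at each layer would typically need to change along with the code.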

The unit-test-first developer mindset grew out of a reality in which integration tests could not be executed on a developer’s desktop. Containers change that.

Containers to the Rescue

With containers, there are very few technical limitations¹ with regard to integration testing on the developer workstation. Using containers, I can run multiple databases, several messaging brokers, and more. Once developers can run full acceptance tests on their workstations, they gain the power of compounding test scope: since the tests run from outside the application, they exercise the entire stack. Even if technical limitations prevent running every needed dependency locally, and even if a developer is forced to mock all of those interfaces, the effort should still focus primarily on building and maintaining tests whose scope compounds.

In an ideal world, my first testing priority as a developer should be 100% acceptance test coverage of all external interfaces. If I am building a REST API, I want a suite of tests that acts as a consumer of my API, and I want those tests to cover every possibility. We all know there will always be gaps in testing because the number of possible scenarios grows exponentially, but there is absolutely no reason we can’t expect the test suite to cover every endpoint with at least one vanilla test.
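One possible way to enforce the “at least one vanilla test per endpoint” rule is to keep the smoke cases in a table and assert that the table covers the API’s routing table, so adding an endpoint without a test fails the suite. The three-route API below is hypothetical and built on `http.server` only so the sketch is self-contained; with a real framework you would read the routes from that framework’s own router instead.

```python
import json
import threading
import unittest
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical routing table for a small REST API: (method, path) -> handler.
ROUTES = {
    ("GET", "/health"): lambda body: (200, {"status": "up"}),
    ("GET", "/orders"): lambda body: (200, []),
    ("POST", "/orders"): lambda body: (201, {"id": 1, **body}),
}


class Api(BaseHTTPRequestHandler):
    def _dispatch(self, method):
        handler = ROUTES.get((method, self.path))
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length)) if length else {}
        status, payload = handler(body) if handler else (404, {"error": "not found"})
        data = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        self._dispatch("GET")

    def do_POST(self):
        self._dispatch("POST")

    def log_message(self, *args):
        pass  # keep test output quiet


# One "vanilla" case per endpoint: method, path, request body, expected status.
SMOKE_CASES = [
    ("GET", "/health", None, 200),
    ("GET", "/orders", None, 200),
    ("POST", "/orders", {"sku": "ABC-1"}, 201),
]


class EndpointSmokeTest(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        cls.server = ThreadingHTTPServer(("127.0.0.1", 0), Api)
        threading.Thread(target=cls.server.serve_forever, daemon=True).start()
        cls.base = "http://%s:%d" % cls.server.server_address[:2]

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def test_every_endpoint_has_a_smoke_case(self):
        covered = {(method, path) for method, path, _, _ in SMOKE_CASES}
        self.assertEqual(set(ROUTES) - covered, set())

    def test_each_endpoint_answers_with_expected_status(self):
        for method, path, body, expected in SMOKE_CASES:
            with self.subTest(method=method, path=path):
                data = json.dumps(body).encode() if body is not None else None
                req = urllib.request.Request(
                    self.base + path, data=data, method=method,
                    headers={"Content-Type": "application/json"},
                )
                with urllib.request.urlopen(req) as resp:
                    self.assertEqual(resp.status, expected)
```

The coverage check is deliberately crude (it only proves each route is hit once), which matches the “vanilla test” floor rather than the “every possibility” ceiling.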

But wait, there’s more. If the acceptance test suite can be executed against the fully built container, the value compounds even further. The acceptance tests now cover not only the application code but also hygiene-related changes. Need to update some libraries your application uses? The acceptance tests cover it. Going to update the application container’s base image? They cover that too. The suite becomes the ultimate source of truth for the container’s validity. Shifting acceptance tests left results in less back-and-forth, fewer silos, and ultimately less context switching for the development team.
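A minimal sketch of how the same suite might be pointed at the built container: the tests read their target from an environment variable and only fall back to an in-process stand-in when none is given. `APP_BASE_URL`, the `/health` endpoint, and `HealthHandler` are hypothetical; in a real project the fallback would start your actual application rather than a stub.

```python
import json
import os
import threading
import unittest
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in app, used only when no external target is given."""

    def do_GET(self):
        status, body = (200, {"status": "up"}) if self.path == "/health" else (404, {})
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args):
        pass  # keep test output quiet


def resolve_target():
    """Return (base_url, server_or_None).

    APP_BASE_URL (a hypothetical variable name) points the suite at an
    already running instance, e.g. the freshly built container. Without it,
    an in-process stand-in is started so the suite still runs locally.
    """
    url = os.environ.get("APP_BASE_URL")
    if url:
        return url.rstrip("/"), None
    server = ThreadingHTTPServer(("127.0.0.1", 0), HealthHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return "http://%s:%d" % server.server_address[:2], server


class ContainerAcceptanceTest(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        cls.base, cls.server = resolve_target()

    @classmethod
    def tearDownClass(cls):
        if cls.server is not None:  # only stop what we started ourselves
            cls.server.shutdown()
            cls.server.server_close()

    def test_health_endpoint_reports_up(self):
        with urllib.request.urlopen(self.base + "/health", timeout=5) as resp:
            self.assertEqual(resp.status, 200)
            self.assertEqual(json.load(resp), {"status": "up"})
```

In a pipeline, this might run as `APP_BASE_URL=http://localhost:8080 python -m unittest` after starting the freshly built image with something like `docker run -p 8080:8080 <image>` (the port is an assumption). Because the test never imports application code, a library bump or a new base image is validated by exactly the same assertions.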

Footnotes

1 The limitations typically stem from the use of legacy databases. Some contain too much business logic in the form of stored procedures, and the source code for these databases is not stored in a way that is easily reproducible with a tool like Flyway or Entity Framework. While it’s easier said than done, if your company cannot quickly and easily replicate the structure and function (schemas, stored procs, functions, etc.) of a production database, along with a set of viable test data, then you should prioritize the effort to make that happen.
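As a rough illustration of what “easily reproducible” can mean, the sketch below keeps schema changes as ordered, versioned scripts and replays them into an empty database, recording what was applied. It borrows Flyway’s `V<version>__<description>.sql` naming convention, but the runner, the script contents, and the `schema_history` table are hypothetical and far simpler than Flyway itself. SQLite stands in for the production engine and has no stored procedures, so those would live in the same kind of versioned scripts on a real database.

```python
import re
import sqlite3

# Hypothetical migration scripts kept in source control. The naming mirrors
# Flyway's versioned-migration convention; this runner is a toy, not Flyway.
MIGRATIONS = {
    "V1__create_customers.sql": "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);",
    "V2__add_email.sql": "ALTER TABLE customers ADD COLUMN email TEXT;",
    "V3__seed_test_data.sql": "INSERT INTO customers (name, email) VALUES ('Ada', 'ada@example.com');",
}


def version_of(filename: str) -> int:
    """Extract the numeric version so V10 sorts after V2."""
    match = re.match(r"V(\d+)__", filename)
    if not match:
        raise ValueError(f"not a versioned migration: {filename}")
    return int(match.group(1))


def migrate(conn: sqlite3.Connection, migrations: dict) -> list:
    """Apply pending migrations in version order and record each one."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_history (version INTEGER PRIMARY KEY, script TEXT)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_history")}
    newly_applied = []
    for name in sorted(migrations, key=version_of):
        version = version_of(name)
        if version in applied:
            continue  # already part of this database's history
        conn.executescript(migrations[name])
        conn.execute("INSERT INTO schema_history VALUES (?, ?)", (version, name))
        newly_applied.append(name)
    conn.commit()
    return newly_applied


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(migrate(conn, MIGRATIONS))  # applies V1, V2, V3 in order
    print(migrate(conn, MIGRATIONS))  # [] because nothing is pending
```

Rerunning the migration against a fresh container gives every developer the same schema and seed data, which is the precondition for running the acceptance suite locally.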

Additionally, in a heavily microservices-based architecture, your microservice might depend on calls to a number of other microservices to deliver its functionality. This remains a limitation because it is very difficult to run an entire development environment on a developer desktop. Mock accordingly.
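One way to “mock accordingly” without giving up black-box tests is to stand up a lightweight HTTP stub in place of each downstream microservice and inject its URL into the application. The sketch below is hypothetical: `InventoryClient`, the `/stock/<sku>` path, and the `quantity` field are invented for illustration, and a dedicated stubbing tool such as WireMock could replace the hand-rolled `start_stub`.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def start_stub(routes: dict):
    """Start a throwaway HTTP server that replays canned JSON for GET paths."""

    class Stub(BaseHTTPRequestHandler):
        def do_GET(self):
            status, body = routes.get(self.path, (404, {"error": "no stub"}))
            data = json.dumps(body).encode()
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(data)))
            self.end_headers()
            self.wfile.write(data)

        def log_message(self, *args):
            pass  # keep test output quiet

    server = ThreadingHTTPServer(("127.0.0.1", 0), Stub)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, "http://%s:%d" % server.server_address[:2]


class InventoryClient:
    """Hypothetical client for a downstream inventory microservice.

    The base URL is injected, so tests can point it at the stub while
    deployed environments point it at the real service.
    """

    def __init__(self, base_url: str):
        self.base_url = base_url

    def in_stock(self, sku: str) -> bool:
        with urllib.request.urlopen(f"{self.base_url}/stock/{sku}", timeout=5) as resp:
            return json.load(resp)["quantity"] > 0


if __name__ == "__main__":
    server, url = start_stub({"/stock/ABC-1": (200, {"quantity": 3}),
                              "/stock/XYZ-9": (200, {"quantity": 0})})
    try:
        client = InventoryClient(url)
        print(client.in_stock("ABC-1"), client.in_stock("XYZ-9"))  # True False
    finally:
        server.shutdown()
        server.server_close()
```

The stub replaces only the dependency you cannot run locally; everything inside your own service still executes for real, so the tests keep their compounding scope.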